When you’re sitting at your computer watching YouTube videos like this, or you’re on your smartphone scrolling through TikTok videos or Facebook posts or watching the news, I bet the last thing that goes through your mind is “I wonder how much all of this is costing to run”.

In the last 40 years we’ve gone from a world with no Internet, where the earliest microprocessor-based devices were things like game consoles and the first home computers, to one where almost everything we use has a microprocessor in it, and many of these devices are Internet capable and connect to servers somewhere in the cloud for updates or to store information.

Almost everything we do now has some connection to the Internet somewhere along the way. Many of these things we use with no direct cost to us other than our own time and forced advertising.

But every single bit of data that is changed from a 1 to a 0 or vice versa in every one of the billions of digital devices incurs a tiny cost in energy.

It was once thought that information such as the digital zeros and ones in a computer cost nothing in the way of energy, but if that was the case, why did computers use so much power?

It was Rolf Landauer, a physicist working at IBM, who in 1961 discovered what is now known as Landauer’s principle: any logically irreversible operation that manipulates or destroys information, such as flipping bits from 0 to 1 or vice versa, increases entropy, which is dissipated as heat.

The amount of energy involved in flipping a bit is so incredibly minuscule, at about 2.9×10⁻²¹ joules, that no one would notice it. But our computing devices now contain so many billions of MOSFETs, changing billions or trillions of bits per second, that this simple operation starts to use quite a lot of energy, and almost all of that is dissipated in the form of heat.
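As a rough check on that figure, here is a minimal sketch of the Landauer limit, k·T·ln 2, evaluated at room temperature. The 300 K temperature and the function name are my own assumptions for illustration; they are not from the video.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in joules per kelvin (exact SI value)

def landauer_limit(temperature_kelvin: float) -> float:
    """Minimum energy in joules dissipated per irreversible bit operation."""
    return K_B * temperature_kelvin * math.log(2)

room_temperature = 300.0  # assumed room temperature in kelvin
energy_per_bit = landauer_limit(room_temperature)
print(f"{energy_per_bit:.2e} J per bit")  # prints 2.87e-21 J per bit
```

That rounds to the 2.9×10⁻²¹ joules quoted above.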

The NVIDIA RTX 4090 video card in my computer can almost double up as a space heater for my room when it’s rendering or playing games, dissipating up to 450 watts, all as heat, and that’s not including the extra 125W from the Intel i9-12900K as well.

And it’s not like these things work in isolation. We now have AI server farms which have hundreds of thousands of CPUs and tens of thousands of GPUs. The amount of heat produced by them becomes a major problem and requires huge industrial-scale air conditioning and cooling systems, which just vent this excess heat into the environment.

In 2024 ChatGPT was receiving about 12 million queries a day, had 100 million active subscribers a month and was costing over $100,000 a day to run, much of which would have been energy costs.

This is the reason why so many data centres are now being built either with associated wind or solar farms, or near geothermal or hydroelectric plants. Electrical power is the primary cost, which is one of the reasons why the UK has so few large data centres: electricity here is some of the most expensive in the world. If you want to make a profitable business from data centres you need as cheap a form of electricity as possible.

A recent DoE report found that in the US alone in 2023, 4.4% of all electricity generation, or about 176 TWh, went into powering data centres, and that this is estimated to rise to between 6.7% and 12%, or between 325 and 580 TWh, by 2028. This also doesn’t include all the tens of millions of end-user computers and smartphones that these data centres are talking to.

One of the most power-intensive sectors is video streaming, with companies like Netflix and, in particular, YouTube drawing the most power.

Bitcoin mining is another significant user of power, with consumption comparable to the electricity use of some countries. In 2024, the Cambridge Bitcoin Electricity Consumption Index estimated that Bitcoin mining used 19.0 GW of power, which is 0.6% to 2.3% of the United States’ electricity demand.

AI is also expected to dramatically increase the amount of energy it is using in the near future.

In 2023 in the US, AI consumed around 8 terawatt hours. This is expected to grow to 52 terawatt hours by 2026, a 550% increase, and by 2030 it is expected to be around 652 terawatt hours, a further 1150% increase on that 52 terawatt hour figure.
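The percentages quoted here can be checked with a couple of lines of arithmetic. This is only a sketch of the sums using the terawatt hour figures given above; the function name is my own.

```python
def percent_increase(old: float, new: float) -> float:
    """Growth from old to new, expressed as a percentage of old."""
    return (new - old) / old * 100.0

# Figures in terawatt hours, as quoted in the text
first_step = percent_increase(8, 52)     # 8 TWh growing to 52 TWh
second_step = percent_increase(52, 652)  # 52 TWh growing to 652 TWh

print(f"8 -> 52 TWh: {first_step:.0f}% increase")     # prints 550%
print(f"52 -> 652 TWh: {second_step:.0f}% increase")  # prints 1154%
```

So the second percentage only works out if it is measured from the 52 terawatt hour figure, not from the original 8.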

This is driven not only by the increasing number of accelerator chips made by the likes of Nvidia, AMD and Intel, but also by each chip becoming more computationally powerful and consuming more power.

For example, Nvidia’s A100 accelerator consumes up to 250 watts and the newer H100 consumes up to 350 watts, a 40% increase in GPU power consumption within two years across one generation of chips.

AMD’s MI250 accelerators draw 500W while the MI300X consumes 750W. Intel’s recently cancelled hybrid AI processor, codenamed Falcon Shores and intended to follow the Gaudi 3 accelerator, was expected to consume 1,500W of power per card and will now be replaced by Jaguar Shores.

Increasing chip densities, packing more MOSFETs into the same area, also increases the heat density, which is basically the amount of heat dissipated per square centimetre.

We are also reaching the limits of how small we can make the transistors or MOSFETs in high performance CPUs, GPUs and accelerator chips for neural networks. To squeeze more computing power into smaller spaces, chips could be stacked on top of each other, effectively creating a 3D computer cube instead of it all being on single flat dies as they are at the moment. The limitation here is removing the heat generated by the chips that will be inside the stack of dies. If the heat cannot be dissipated, hot spots can occur and literally burn out the MOSFETs.

Because of the heat density of modern chips, many of them now cannot run with all the MOSFETs working at once. They would just get too hot, so only parts of the chip are running whilst the rest are turned off.

Currently, to cool chips down you would mount a heat sink, a finned piece of aluminium or copper fixed to the outside surface of the chip. This would be either air cooled in most home and office computers or liquid cooled for high performance computing.

The highest heat density of currently available commercial chips peaks at about 100 watts per square centimetre, but DARPA have been working on chip technologies which can reach up to 1000 watts per square centimetre for high performance radar and supercomputers.

These use liquid coolant directly in the chips themselves, pumping an electrically insulating liquid through tiny microchannels.

This is how it has been done for decades: pushing ever-increasing performance at the cost of wasted energy. Even though the amount of power used per MOSFET has decreased enormously, the number of MOSFETs crammed into the die real estate has increased exponentially, creating these ever hotter chips and ever more drastic methods to keep them cool and stop them burning out.

But what if we could create a form of computing that would use radically less power in the first place?

We could have more computing power in smaller packages because we wouldn’t have to deal with the heat. This was first proposed by Rolf Landauer in the 1960s.

If you remember what I said earlier about Landauer’s principle, in which any logically irreversible operation that destroys information dissipates energy as heat, you might be wondering what “logically irreversible” means.

Many physical operations can be thought of as reversible: the laws of physics work equally well going backwards or forwards. Here is an example of that.

Imagine you’re a commander in the army and an enemy artillery gun is firing at your position and you want to know where the gun is to launch counter fire. If you can track the projectile on its final approach with radar, you can reverse the progress of the ballistic action back to where the gun is and find its position, and this is what happens in the modern military.

Currently, all our computing logic is irreversible: it flows in only one direction, from input to output. If we know what the inputs are, we know what the output will be, but if we only know the output value, we can never know for certain what the inputs were.

To give you a simple example using a two-input AND gate: out of the four combinations of 0 and 1 that could occur on the inputs, there is only one where the output would be 1, and the other three would result in 0. So if we looked at the output only and saw a 0, we would not be able to tell which of those three input combinations had produced it.
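The AND gate example can be written out as a short sketch that groups every input pair by the output it produces. The function and variable names are just for illustration.

```python
from itertools import product

def and_gate(a: int, b: int) -> int:
    """Ordinary two-input AND: the output is 1 only when both inputs are 1."""
    return a & b

# Group every possible input pair by the output it produces
inputs_for_output = {0: [], 1: []}
for a, b in product((0, 1), repeat=2):
    inputs_for_output[and_gate(a, b)].append((a, b))

print(inputs_for_output[1])  # [(1, 1)]
print(inputs_for_output[0])  # [(0, 0), (0, 1), (1, 0)]
```

An output of 1 pins down the inputs exactly, but an output of 0 leaves three candidates, so the information about which one it was has been lost.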

Now you may be wondering what this has to do with power consumption. Well, Landauer thought that if you stored the inputs as well as the output you could reverse the operation and recover the energy to power the next operation, but that would take up too much memory, so it was impractical at the time.

Later, others including Richard Feynman proposed that if you could create a reversible logic gate that would be able to compute the opposite of what the answer was, or “decompute” the output, and you could store that information as energy, it could be used to power the next logical operation, effectively using little to no power to perform it.

This principle is analogous to regenerative braking in an electric vehicle. When the vehicle is driving along normally it is using power from the battery, but when you take your foot off the accelerator the motor turns into a generator and feeds power back into the battery using the kinetic energy of the car as it slows down.

Even in a modest EV, this can reduce the power consumption by up to 35%. In a computer using reversible logic the saving would be much higher: theoretically it could reduce the amount of power required to perform logical operations by more than three orders of magnitude, or a factor of 1000, although there will always be some very small losses.

The type of gate used would be along the lines of a CNOT or “controlled-not” gate, or a CCNOT or “controlled-controlled-not” gate. These are also known as the Feynman gate and the Toffoli gate respectively, and are used in quantum computers to perform classical Boolean logic with the quantum states.

In these, one of the inputs is also an output, and knowing this you can work out what the inputs must have been and so reverse the operation.
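Here is a minimal sketch of those two gates working on ordinary bits, to show why they can be run backwards. This is the textbook classical behaviour of CNOT and CCNOT, not a model of any particular chip, and the function names are my own.

```python
from itertools import product

def cnot(control: int, target: int) -> tuple[int, int]:
    """Feynman gate: the control passes through, the target flips if control is 1."""
    return control, target ^ control

def toffoli(a: int, b: int, target: int) -> tuple[int, int, int]:
    """Toffoli gate: a and b pass through, the target flips only if both are 1."""
    return a, b, target ^ (a & b)

# Applying either gate twice returns the original bits, so each is its own inverse
for bits in product((0, 1), repeat=2):
    assert cnot(*cnot(*bits)) == bits
for bits in product((0, 1), repeat=3):
    assert toffoli(*toffoli(*bits)) == bits

# With the target starting at 0, the third output is a AND b,
# and the inputs are still there on the first two outputs
for a, b in product((0, 1), repeat=2):
    assert toffoli(a, b, 0) == (a, b, a & b)
```

Because the inputs are carried through alongside the result, running the same gate again "decomputes" the answer, which the plain AND gate cannot do.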

These must also be adiabatic circuits, ones that use reversible logic to conserve energy. In an adiabatic circuit the MOSFET would only turn on when there is no voltage across the source and drain, and only turn off when there is no current flowing through it, which also reduces power consumption.

The circuit uses elements that can store energy, such as an LC network (an inductor and a capacitor), as part of the power supply. This converts the energy of the initial logic switching into magnetic flux and then releases it to power the circuit when the reverse computation takes place.

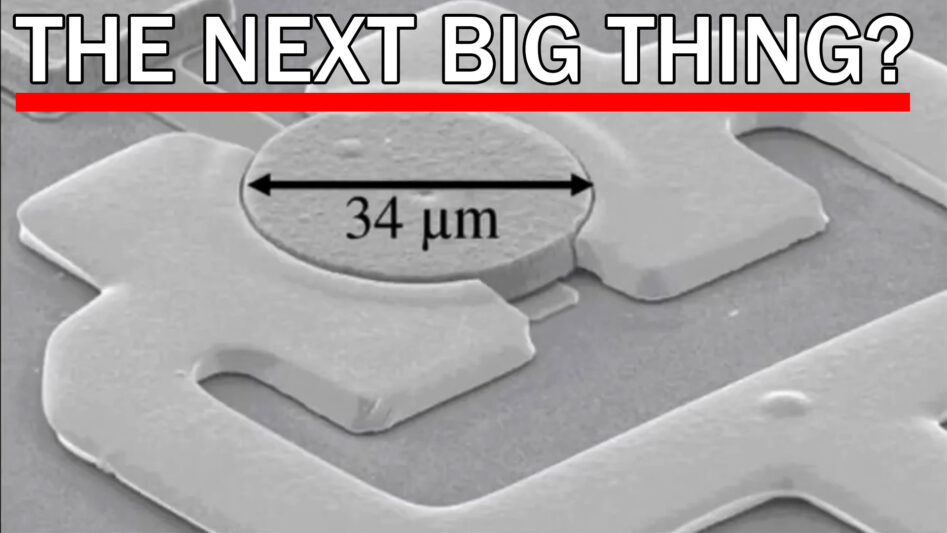

Normally these inductors have been too large to fit on the chip, but in a new development they have been embedded into the chip. This still makes it quite a bit larger than, say, 7nm technology, but it is all built in.

When switching, instead of a hard on-off, the adiabatic technique controls the voltage so that it builds up more slowly, at a resonant frequency matching that of the LC network. Using the reversible logic to de-compute the logical function, the switching energy effectively bounces back and forth through the LC circuit between computing the output and de-computing it, requiring very little extra power because the energy is stored and reused instead of being dissipated as heat.
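To give a feel for why ramping the voltage slowly helps, here is a rough sketch using the standard textbook approximations: charging a capacitance C abruptly to a voltage V through a resistance dissipates ½CV², while a slow ramp over a time T much longer than RC dissipates roughly (RC/T)·CV². The component values below are hypothetical round numbers chosen for illustration, not figures from Vaire or from the video.

```python
import math

def abrupt_switching_loss(c: float, v: float) -> float:
    """Energy in joules lost when a capacitance is charged with a hard on-off step."""
    return 0.5 * c * v**2

def adiabatic_ramp_loss(r: float, c: float, v: float, ramp_time: float) -> float:
    """Approximate loss for a slow voltage ramp; only valid when ramp_time >> r * c."""
    return (r * c / ramp_time) * c * v**2

def lc_resonant_frequency(inductance: float, capacitance: float) -> float:
    """Natural frequency in hertz at which energy swaps between inductor and capacitor."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance * capacitance))

# Hypothetical values, assumed purely for illustration
R = 1e3      # switch resistance in ohms
C = 1e-15    # load capacitance in farads (1 fF)
V = 1.0      # supply voltage in volts
RAMP = 1e-9  # ramp time in seconds, 1000 times longer than R * C

abrupt = abrupt_switching_loss(C, V)
adiabatic = adiabatic_ramp_loss(R, C, V, RAMP)
print(f"abrupt: {abrupt:.1e} J, adiabatic: {adiabatic:.1e} J")
print(f"reduction factor: {abrupt / adiabatic:.0f}")

# Resonant frequency for an assumed 1 nH inductor and 1 pF capacitor
print(f"LC resonance: {lc_resonant_frequency(1e-9, 1e-12):.2e} Hz")
```

The trade-off is visible in the formula: the slower the ramp, the lower the loss, which is why these chips give up clock speed in exchange for efficiency.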

It’s only in the last few years that this has been seen as a practical solution, and in 2025 Vaire Computing, a joint UK/US company, will release the first near-zero energy processor. If this works as expected it could trigger a new wave of near-zero energy technology that will use a tiny fraction of the power of today’s processors.

The goal of Vaire is not to create processors with a small number of very high-performance cores like today with chips that run hot, but processors with a large number of ultra-efficient cores where the chips run cold.

A couple of caveats are that the chips, by their very nature of having embedded inductors, will be larger than current technologies and also slower. But because there is virtually no heat dissipated, they could be packaged together in ways which are currently impossible for traditional VLSI technologies, and there has been a lot of interest from AI chip manufacturers.

It might be a bit soon to say but I think that the days of using my PC as a space heater might be coming to an end in the not-too-distant future.

So thanks for watching. I hope you enjoyed the video, and if you did then please thumbs up, share and subscribe. A big thank you goes to all of our patrons for their ongoing support.